Abstract

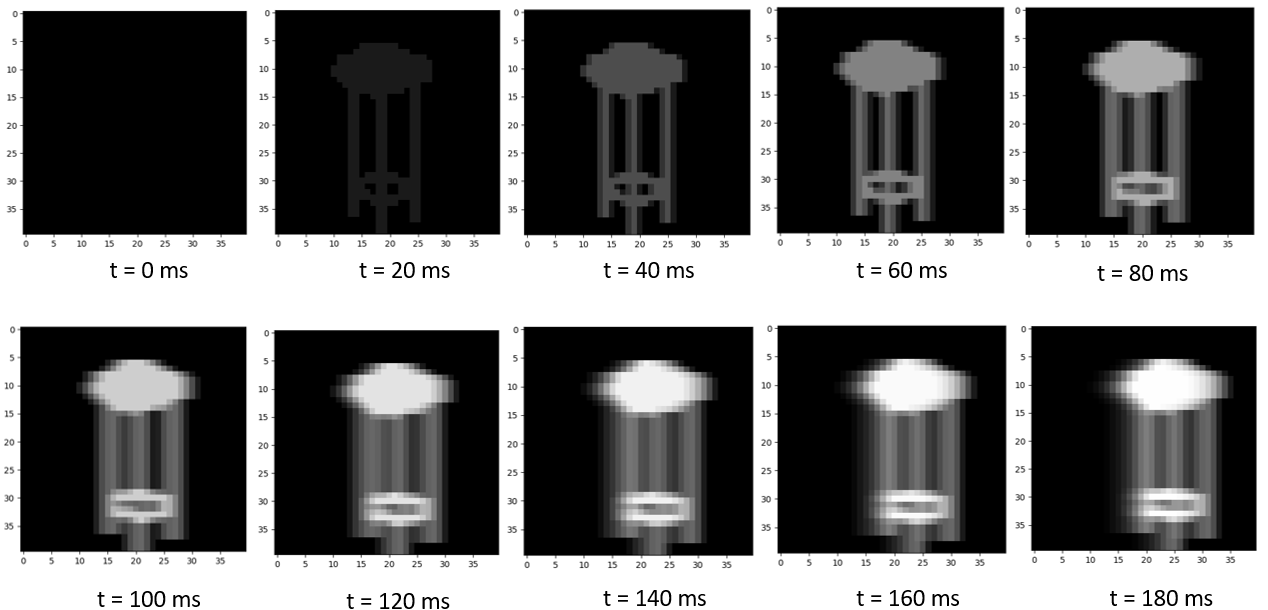

Recent advances have demonstrated the potential of visual prostheses to partially restore vision for certain types of visual impairment. However, because of the limitations of these devices, there is growing interest in computer vision techniques that extract relevant information from the surroundings and adapt it for presentation through the prosthesis. Evaluating such methods is challenging because few individuals have undergone the implantation procedure. To overcome this, visual prosthesis simulators are used, enabling experiments with sighted participants. This work introduces a novel, immersive simulator integrated within a robotics framework and designed for testing different modes of representation using virtual reality goggles. Notably, the simulator incorporates a temporal model inspired by experiments with real patients, which accounts for the time dimension when generating visual stimuli. In addition, the simulator offers complete user immersion within a virtual environment that incorporates a semantic segmentation neural network to aid in detecting objects and people.